✅ Common Causes of Search Engine Indexing Problems

If you’ve ever published a page and waited weeks or even months to see it appear in Google Search, you know how frustrating indexing issues can be. When a page isn’t indexed, it doesn’t matter how valuable your content is—it simply won’t show up in search results.

Search engine indexing problems are surprisingly common, and they can affect sites of all sizes, from blogs to massive e-commerce stores. Understanding why pages fail to get indexed is the first step toward fixing the problem and improving your SEO performance.

Here’s a detailed guide to the most common causes of search engine indexing problems and what they mean for your website.

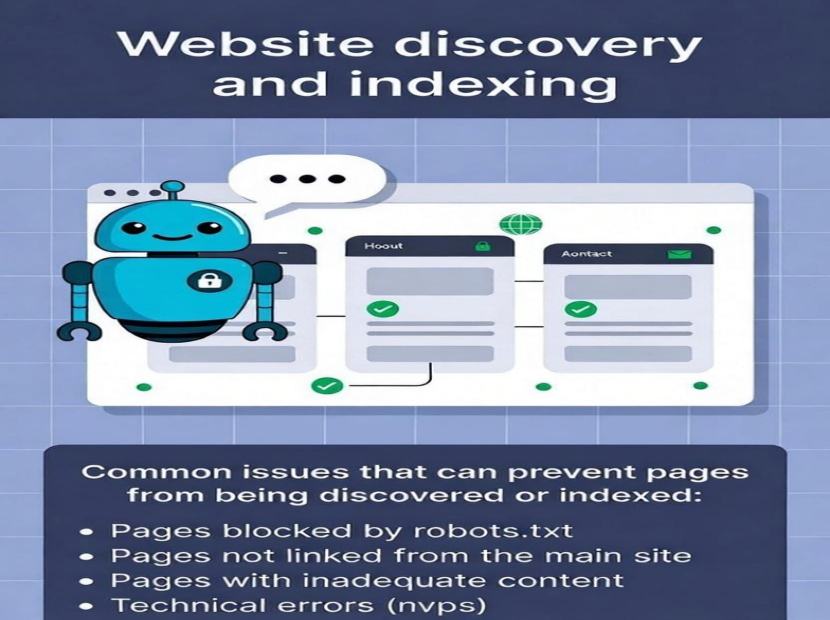

1. Poor Discoverability: Google Can’t Find Your Pages

Before Google can index a page, it has to know it exists. If your content isn’t easily discoverable, indexing may never happen.

Why pages might be hard to find:

- Orphan pages with no internal links pointing to them
- Deeply nested pages buried in a complicated site structure
- Missing or outdated XML sitemaps
- Few or no external backlinks pointing to the page

Even if your content is high-quality, if Google doesn’t see a path to it, it won’t index it. Ensuring pages are linked properly and included in a sitemap is crucial for discoverability.
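One way to spot orphan pages is to compare the URLs listed in your sitemap with the URLs your own pages link to. The sketch below assumes you already have the sitemap XML as a string and a map of internal links from a crawl of your site; `EXAMPLE_SITEMAP`, `EXAMPLE_LINKS`, and the function names are hypothetical and exist only for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    }


def find_orphans(sitemap_xml: str, internal_links: dict[str, set[str]]) -> set[str]:
    """Return sitemap URLs that no other page on the site links to."""
    linked: set[str] = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it discoverable.
        linked |= {target for target in targets if target != source}
    return sitemap_urls(sitemap_xml) - linked


# Hypothetical data: a three-page sitemap and the links found by a crawl.
EXAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/</loc></url>
  <url><loc>https://example.com/old-landing-page/</loc></url>
</urlset>"""

EXAMPLE_LINKS = {
    "https://example.com/": {"https://example.com/blog/"},
    "https://example.com/blog/": {"https://example.com/"},
}

orphans = find_orphans(EXAMPLE_SITEMAP, EXAMPLE_LINKS)
print(orphans)  # {'https://example.com/old-landing-page/'}
```

A page flagged here is still in the sitemap, so Google may discover it, but it has no internal path leading to it.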

2. Crawlability Issues: Google Can’t Access Your Pages

Sometimes Google knows a page exists but cannot crawl it. If crawlers can’t access your content, indexing will fail.

Common crawlability issues include:

- robots.txt accidentally blocking important pages or folders
- noindex tags left on live pages
- 404 (not found) or 500 (server error) responses
- Redirect chains or redirect loops
- Blocked CSS or JavaScript that prevents Google from rendering the page

Crawlability issues are usually technical, but they’re one of the main reasons pages remain unindexed. Regular technical audits can help detect these issues before they affect your SEO.
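Two of the checks above, robots.txt rules and leftover noindex tags, are easy to script as part of an audit. The following is a minimal sketch using only Python's standard library. It assumes you have already fetched the robots.txt body and the page HTML as strings. Python's `urllib.robotparser` does not replicate every detail of how Google matches rules, so treat the result as a first-pass signal. The helper names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_blocked_by_robots(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules disallow this URL for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


class _RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str) -> bool:
    """Return True if the page carries a noindex robots meta directive."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


# Hypothetical inputs for illustration.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"
PAGE_HTML = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

print(is_blocked_by_robots(ROBOTS_TXT, "https://example.com/private/page"))  # True
print(is_blocked_by_robots(ROBOTS_TXT, "https://example.com/blog/"))         # False
print(has_noindex(PAGE_HTML))                                                # True
```

This sketch only inspects the HTML meta tag. A noindex directive can also be sent in an `X-Robots-Tag` HTTP response header, which would need a separate check.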

3. Slow or Unreliable Server Performance

Google prioritizes websites that are fast and reliable. If your server responds slowly or frequently fails, Google may reduce crawl frequency or stop crawling altogether.

Server-related problems that impact indexing:

- High page load times
- Frequent server errors or downtime
- Overloaded shared hosting accounts
- Poor hosting infrastructure

Websites with slow servers not only face indexing delays but also risk lower rankings, because page speed is one of the signals Google uses when ranking pages.
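To see whether your server is a likely bottleneck, you can summarize response data you already collect, such as access logs or an uptime monitor. The sketch below assumes a list of `(status_code, seconds)` samples. The sample values and the `summarize_responses` name are hypothetical, and what counts as "too slow" depends on your site, so no thresholds are built in.

```python
from statistics import median


def summarize_responses(samples: list[tuple[int, float]]) -> dict[str, float]:
    """Summarize server health from (status_code, response_seconds) samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    # 5xx responses indicate the server itself failed to answer the request.
    server_errors = sum(1 for status, _ in samples if 500 <= status < 600)
    return {
        "server_error_rate": server_errors / len(samples),
        "median_seconds": median(seconds for _, seconds in samples),
    }


# Hypothetical samples: three successful responses and one 503.
SAMPLES = [(200, 0.25), (200, 0.5), (503, 2.0), (200, 0.75)]

summary = summarize_responses(SAMPLES)
print(summary)  # {'server_error_rate': 0.25, 'median_seconds': 0.625}
```

Tracking these two numbers over time makes it easier to connect a drop in crawling with a period of errors or slow responses.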

4. Thin or Low-Quality Content

Google prefers indexing content that is useful, informative, and unique. Pages that provide little value or duplicate existing content may be skipped.

Examples of thin or low-value content:

- Very short pages with minimal information
- Duplicate content copied from other pages or websites
- Auto-generated content with little real insight
- Pages with unclear purpose or weak search intent

Creating rich, unique, and helpful content increases the likelihood that Google will index your pages quickly and rank them well.
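Google does not publish how it measures content quality or duplication, so any script can only approximate it. As a rough heuristic, you can count words and compare pages using overlapping word sequences (shingles) and Jaccard similarity. This technique is a stand-in chosen for illustration, and the function names and sample texts are hypothetical.

```python
import re


def words(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences of the given length."""
    tokens = words(text)
    return {tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)}


def jaccard_similarity(text_a: str, text_b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0  # Too short to compare meaningfully.
    return len(a & b) / len(a | b)


# Hypothetical page texts.
PAGE_A = "Our red running shoes are light, durable, and built for long distances."
PAGE_B = "Our red running shoes are light, durable, and built for long distances."
PAGE_C = "Read the returns policy before sending any item back to the warehouse."

print(len(words(PAGE_A)))                  # 12
print(jaccard_similarity(PAGE_A, PAGE_B))  # 1.0
print(jaccard_similarity(PAGE_A, PAGE_C))  # 0.0
```

Pairs of pages with a similarity close to 1.0 are candidates for consolidation, and pages with very low word counts are worth a manual review.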

5. Confusing Signals: Duplicate Content and Canonical Issues

Sometimes pages aren’t indexed because Google isn’t sure which version of a page to include. Conflicting signals about page authority or duplication can cause problems.

Typical issues:

- Incorrect canonical tags pointing to the wrong URL
- Multiple URLs with identical or near-identical content
- Pagination, category pages, or faceted navigation creating duplicate signals
- Misconfigured hreflang tags for multilingual sites

Resolving these conflicts helps Google understand which page to index and prevents important content from being ignored.
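A quick way to audit canonical tags is to extract the `rel="canonical"` link from each page and compare it with the URL you expect to be indexed. The sketch below assumes the page HTML is already available as a string. The `canonical_status` helper and its status labels are hypothetical, and it only reads the HTML tag, not canonical hints sent in HTTP headers or sitemaps.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {key: (value or "") for key, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))


def canonical_status(page_url: str, html: str) -> str:
    """Classify a page's canonical tag relative to its own URL."""
    parser = _CanonicalParser()
    parser.feed(html)
    parser.close()
    # Resolve relative hrefs against the page URL before comparing.
    targets = {urljoin(page_url, href) for href in parser.canonicals}
    if not targets:
        return "missing"
    if len(targets) > 1:
        return "conflicting"
    return "self" if targets == {page_url} else "points elsewhere"


# Hypothetical page: a filtered URL that declares the unfiltered page as canonical.
HTML = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'

print(canonical_status("https://example.com/shoes?color=red", HTML))  # points elsewhere
print(canonical_status("https://example.com/shoes", HTML))            # self
```

"Points elsewhere" is not always a mistake. It is the intended result for filtered or parameterized URLs, and a problem only when the page itself is the one you want indexed.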

6. Weak Internal Linking Structure

Internal links are like signposts for search engines, showing which pages are important and helping crawlers navigate your site. Weak internal linking can prevent Google from finding and indexing key pages.

Problems caused by poor internal linking:

- Pages with few or no internal links remain “orphaned”
- Important pages buried too deep in the site hierarchy
- Broken links that stop crawlers from reaching content

A thoughtful internal linking strategy ensures that all your important pages are easily accessible to both users and search engines.
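To find pages that are buried too deep, you can measure click depth: the minimum number of links a visitor or crawler must follow from the homepage to reach a page. The sketch below runs a breadth-first search over a link graph. It assumes you already have that graph from a crawl, and the `LINKS` data and `click_depths` name are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # First visit is the shortest path in BFS.
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/shop": [],
    "/blog/post-1": [],
    "/landing": ["/"],  # Links out, but nothing links to it.
}

depths = click_depths(LINKS, "/")
print(depths)                     # {'/': 0, '/blog': 1, '/shop': 1, '/blog/post-1': 2}
print(set(LINKS) - set(depths))   # {'/landing'}
```

Pages missing from the result cannot be reached by following internal links from the homepage, and pages with a high depth are candidates for extra links from more prominent pages.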

7. JavaScript and Rendering Problems

Websites built with JavaScript-heavy frameworks—like React, Angular, or Vue—can sometimes hide content from Google if it isn’t rendered properly.

Rendering-related issues:

- Lazy-loaded content that Googlebot can’t see
- Dynamically loaded content that appears too late
- Single-page applications (SPAs) not showing full content to crawlers

Modern SEO often requires ensuring that JavaScript content is visible to search engines, either through server-side rendering or other techniques.
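A simple first test is to check whether a key phrase from the page appears in the initial HTML response, before any JavaScript runs. The sketch below extracts visible text while skipping `<script>` and `<style>` contents. It assumes you have the raw HTML as a string, and the helper names and sample pages are hypothetical. A negative result does not prove Google cannot see the content, since Googlebot can render JavaScript. It only shows that indexing depends on rendering succeeding.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects text nodes, ignoring anything inside <script> or <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def phrase_in_initial_html(html: str, phrase: str) -> bool:
    """Return True if the phrase appears in the HTML's text without running scripts."""
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    # Collapse whitespace so line breaks in the markup do not break the match.
    text = " ".join(" ".join(parser.chunks).split())
    return phrase.lower() in text.lower()


# Hypothetical responses: a client-rendered shell and a server-rendered page.
SPA_HTML = '<html><body><div id="root"></div><script>render("Blue suede shoes")</script></body></html>'
SSR_HTML = '<html><body><div id="root"><h1>Blue suede shoes</h1></div></body></html>'

print(phrase_in_initial_html(SPA_HTML, "Blue suede shoes"))  # False
print(phrase_in_initial_html(SSR_HTML, "Blue suede shoes"))  # True
```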

8. Crawl Budget Limitations (Large Websites)

For very large websites, Google allocates a “crawl budget,” which is the number of pages it will crawl on your site within a given timeframe. If your budget is wasted, important pages may never be crawled or indexed.

How crawl budget can be wasted:

- Duplicate URLs or excessive URL parameters
- Tag pages, filter pages, or faceted navigation that adds little value
- Infinite scroll or calendar pages that create crawling loops

Optimizing your crawl budget ensures that Google focuses on pages that truly matter.
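To estimate how much crawling goes to parameter variations of the same page, you can normalize URLs and group the ones that collapse to the same address. The sketch below assumes a list of crawled URLs, for example from server logs. The `IGNORED_PARAMS` set is a hypothetical example, and which parameters are safe to ignore depends entirely on how your site uses them.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change the page's main content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def normalize(url: str) -> str:
    """Drop ignored parameters and fragments, and sort what remains."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def group_duplicates(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalized URL to its variants, keeping only groups with duplicates."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


# Hypothetical crawled URLs.
URLS = [
    "https://example.com/shoes?utm_source=news",
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes",
    "https://example.com/hats?color=red",
]

for canonical, variants in group_duplicates(URLS).items():
    print(canonical, len(variants))  # https://example.com/shoes 3
```

Large groups point to parameters worth handling with canonical tags, internal link cleanup, or robots.txt rules.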

9. New or Low-Authority Websites

New websites or those with limited authority often face slower indexing because Google takes time to evaluate trustworthiness.

Factors affecting new/low-authority sites:

- Few backlinks
- Low brand presence
- Minimal indexed content

Building authority through quality content, backlinks, and consistent site structure can help speed up indexing over time.

10. Security or Policy Issues

In rare cases, indexing issues are caused by serious problems like malware or manual penalties.

Potential causes:

- Malware or hacked pages
- Manual actions from Google for spammy practices
- Security warnings preventing Google from crawling

These issues require urgent attention, as they can block indexing entirely or damage your site’s reputation.

Conclusion: Understanding the Root Causes Is Key

Most indexing problems fall into one of four categories:

- Google can’t find your page – improve discoverability.
- Google can’t crawl your page – fix technical barriers.
- Google doesn’t value the page – improve content quality.
- Google receives conflicting signals – resolve duplicates, canonicals, and internal links.

By identifying the exact reason your pages aren’t indexed, you can take targeted action to fix the issue. Regular audits, strong content, proper site architecture, and technical optimization are essential to ensure your pages are indexed quickly and consistently.

Proper indexing is the foundation of search visibility and long-term SEO success, so understanding these causes is the first step toward building a site that search engines can fully recognize and trust.